I am scared of Facebook. The company’s ambition, its ruthlessness, and its lack of a moral compass scare me.

It’s worth saying ‘Don’t be evil,’ because lots of businesses are. This is especially an issue in the world of the internet. Internet companies are working in a field that is poorly understood (if understood at all) by customers and regulators. The stuff they’re doing, if they’re any good at all, is by definition new. In that overlapping area of novelty and ignorance and unregulation, it’s well worth reminding employees not to be evil, because if the company succeeds and grows, plenty of chances to be evil are going to come along.

In the open air, fake news can be debated and exposed; on Facebook, if you aren’t a member of the community being served the lies, you’re quite likely never to know that they are in circulation. It’s crucial to this that Facebook has no financial interest in telling the truth.

Misinformation is in fact spread in a variety of ways:

Information (or Influence) Operations – Actions taken by governments or organised non-state actors to distort domestic or foreign political sentiment.

False News – News articles that purport to be factual, but which contain intentional misstatements of fact with the intention to arouse passions, attract viewership, or deceive.

False Amplifiers – Co-ordinated activity by inauthentic accounts with the intent of manipulating political discussion (e.g. by discouraging specific parties from participating in discussion, or amplifying sensationalistic voices over others).

Disinformation – Inaccurate or manipulated information/content that is spread intentionally. This can include false news, or it can involve more subtle methods, such as false flag operations, feeding inaccurate quotes or stories to innocent intermediaries, or knowingly amplifying biased or misleading information.

For all the talk about connecting people, building community, and believing in people, Facebook is an advertising company. … Facebook is in the surveillance business. … What Facebook does is watch you, and then use what it knows about you and your behaviour to sell ads.

Since there is so much content posted on the site, the algorithms used to filter and direct that content are the thing that determines what you see: people think their news feed is largely to do with their friends and interests, and it sort of is, with the crucial proviso that it is their friends and interests as mediated by the commercial interests of Facebook. Your eyes are directed towards the place where they are most valuable for Facebook.

It’s sort of funny, and also sort of grotesque, that an unprecedentedly huge apparatus of consumer surveillance is fine, apparently, but an unprecedentedly huge apparatus of consumer surveillance which results in some people paying higher prices may well be illegal.

In developed countries where Facebook has been present for years, use of the site peaks at about 75 per cent of the population (that’s in the US). Applied to the world’s connected population, that rate would imply a total potential audience for Facebook of 1.95 billion. At two billion monthly active users, Facebook has already gone past that number, and is running out of connected humans.

Whatever comes next will take us back to those two pillars of the company, growth and monetisation. Growth can only come from connecting new areas of the planet. … Here in the rich world, the focus is more on monetisation.

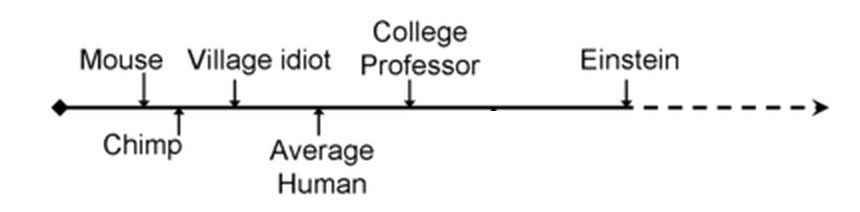

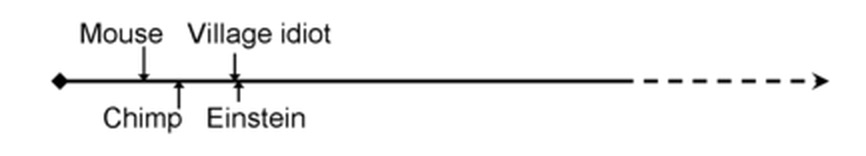

Automation and artificial intelligence are going to have a big impact in all kinds of worlds. These technologies are new and real and they are coming soon. Facebook is deeply interested in these trends. We don’t know where this is going, we don’t know what the social costs and consequences will be, we don’t know what will be the next area of life to be hollowed out, the next business model to be destroyed, the next company to go the way of Polaroid or the next business to go the way of journalism or the next set of tools and techniques to become available to the people who used Facebook to manipulate the elections of 2016. We just don’t know what’s next, but we know it’s likely to be consequential, and that a big part will be played by the world’s biggest social network. On the evidence of Facebook’s actions so far, it’s impossible to face this prospect without unease.