Source: How the Enlightenment Ends – The Atlantic, by Henry A. Kissinger

Philosophically, intellectually—in every way—human society is unprepared for the rise of artificial intelligence.

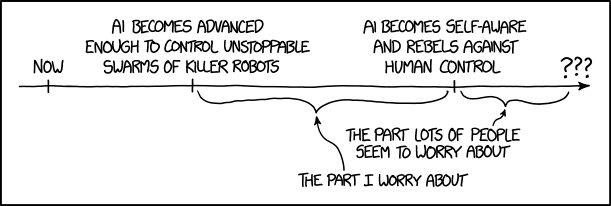

What would be the impact on history of self-learning machines—machines that acquired knowledge by processes particular to themselves, and applied that knowledge to ends for which there may be no category of human understanding? … How would choices be made among emerging options?

If AI learns exponentially faster than humans, we must expect it to accelerate, also exponentially, the trial-and-error process by which human decisions are generally made: to make mistakes faster and of greater magnitude than humans do. It may be impossible to temper those mistakes, as researchers in AI often suggest, by including in a program caveats requiring “ethical” or “reasonable” outcomes. Entire academic disciplines have arisen out of humanity’s inability to agree upon how to define these terms. Should AI therefore become their arbiter?

Ultimately, the term artificial intelligence may be a misnomer. To be sure, these machines can solve complex, seemingly abstract problems that had previously yielded only to human cognition. But what they do uniquely is not thinking as heretofore conceived and experienced. Rather, it is unprecedented memorization and computation. Because of its inherent superiority in these fields, AI is likely to win any game assigned to it. But for our purposes as humans, the games are not only about winning; they are about thinking. By treating a mathematical process as if it were a thought process, and either trying to mimic that process ourselves or merely accepting the results, we are in danger of losing the capacity that has been the essence of human cognition.