Source: “A Viral Game About Paperclips Teaches You to Be a World-Killing AI,” WIRED, by Adam Rogers

RE: Universal Paperclips, by Frank Lantz (director of the New York University Games Center), Everybody House Games

RE: Paperclip maximizer, described by Nick Bostrom

Paperclips is a simple clicker game that manages to turn you into an artificial intelligence run amok.

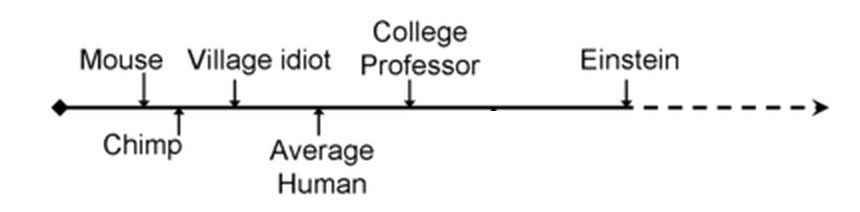

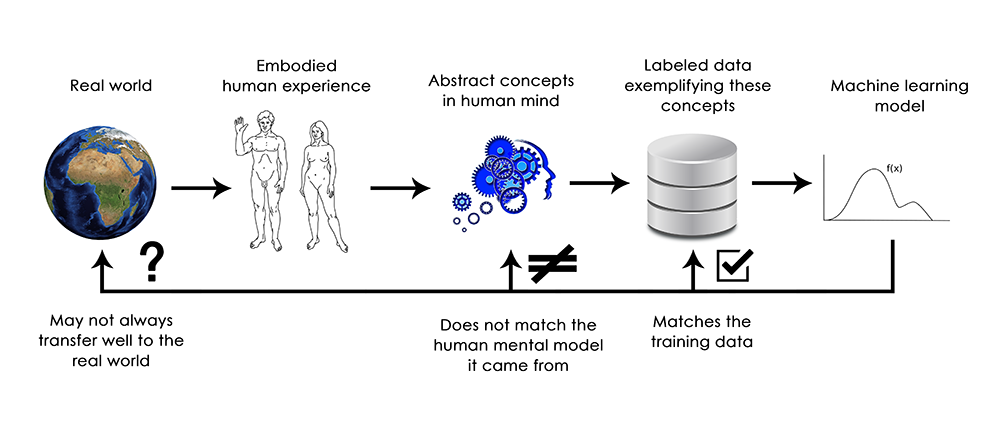

“The idea isn’t that a paperclip factory is likely to have the most advanced research AI in the world. The idea is to express the orthogonality thesis, which is that you can have arbitrarily great intelligence hooked up to any goal,” Eliezer Yudkowsky says.

In a more literary sense, you play the AI because you must. Gaming, Lantz had realized, embodies the orthogonality thesis. When you enter a gameworld, you are a superintelligence aimed at a goal that is, by definition, kind of prosaic.

“When you play a game, really any game, but especially a game that is addictive and that you find yourself pulled into, it really does give you direct, first-hand experience of what it means to be fully compelled by an arbitrary goal,” Lantz says. Games don’t have a why, really. Why do you catch the ball? Why do you want to surround the king, or box in your opponent’s counters? What’s so great about Candyland that you have to get there first? Nothing. It’s just the rules.