Source: AI winter is well on its way, by Filip Piekniewski

OK, so we can now train AlexNet in minutes rather than days, but can we train a 1000x bigger AlexNet in days and get qualitatively better results? Apparently not…

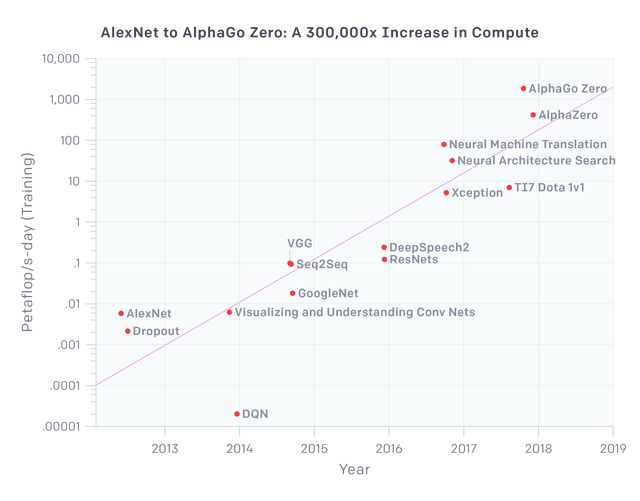

So in fact this graph, which was meant to show how well deep learning scales, indicates the exact opposite. We can’t just scale up AlexNet and get correspondingly better results: we have to fiddle with specific architectures, and additional compute effectively does not buy much without an order of magnitude more data samples, which in practice are only available in simulated game environments.