Source: Complicating the Narratives – The Whole Story

What if journalists covered controversial issues differently — based on how humans actually behave when they are polarized and suspicious? … The idea is to revive complexity in a time of false simplicity.

How did you come to have your political views?

Social psychologist Jonathan Haidt identifies six moral foundations that form the basis of political thought: care, fairness, liberty, loyalty, authority and sanctity.

What is dividing us?

How should we decide?

How did you come to that?

What is oversimplified about this issue?

How has this conflict affected your life?

What do you think the other side wants?

What’s the question nobody is asking?

Listen not just to what [people] say, but to their “gap words,” or the things that they don’t say.

…

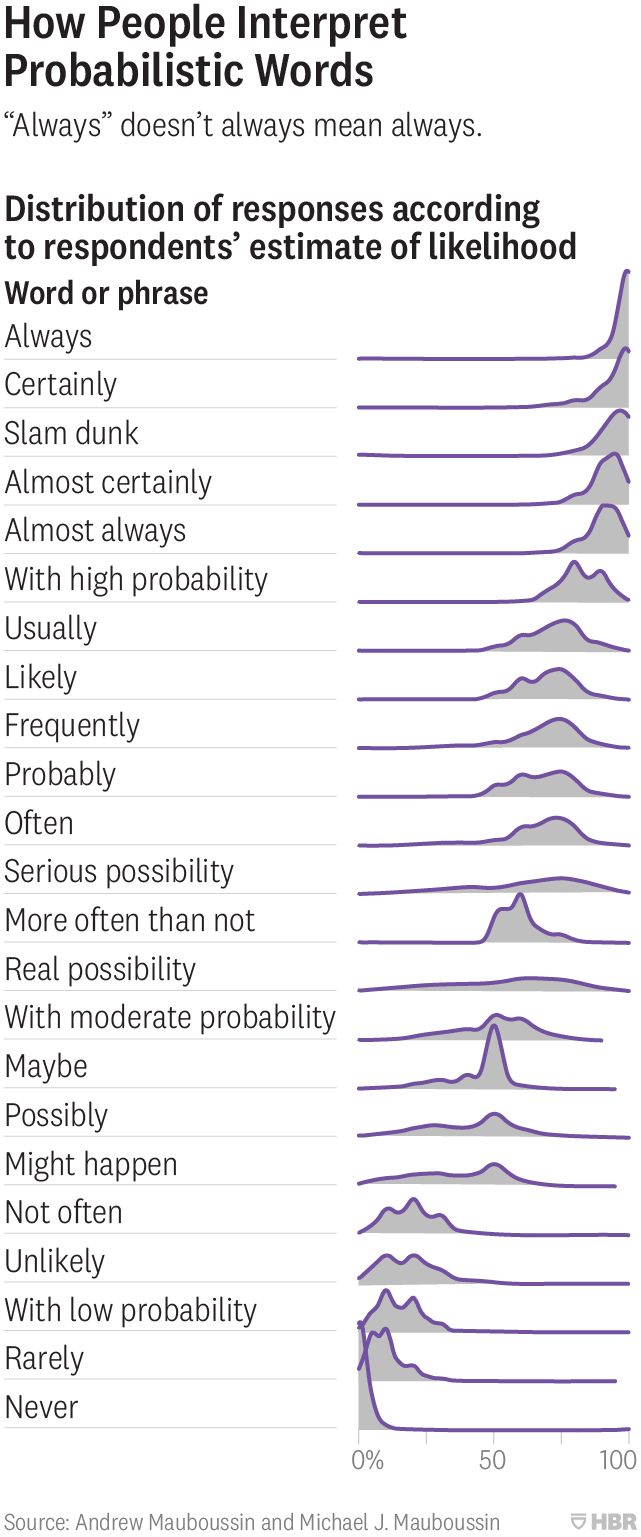

Listen for specific clues or “signposts,” which are usually symptoms of deeper, hidden meaning. Signposts include words like “always” or “never,” any sign of emotion, the use of metaphors, statements of identity, words that get repeated or any signs of confusion or ambiguity. When you hear one of these clues, identify it explicitly and ask for more.

…

Double-check: give the person a distillation of what you thought they meant and see what they say.